- The Strategist

Take your AI to the Next Level with this One Weird Trick

I used the meme headline!

Believe it or not, although I hate that I used this headline because it’s so SEO-cringe, it is true.

There’s a weird trick that improves AI output. Funnily enough, I don’t see many people using it. In fact, I don’t see anyone using it. So I guess this is also an “exclusive offer.”

I discovered this prompt-engineering hack myself, though others may have discovered it too, so I won’t claim to be the sole inventor. But I’ve found it helpful, and I haven’t seen anyone else use it.

It often solves a particular class of “reasoning” problems better than the chain-of-thought reasoning built into reasoning models. I haven’t yet tried combining it with chain-of-thought, though.

Strawberry

How many rs are in strawberry?

Three. It's not a tricky question.

LLMs have a notoriously hard time with it, though, because LLMs are just heuristic blog-interpolators. There aren’t many blogs about how many letters are in words, so there’s not much in the training set for LLMs to learn the answer from.

Later models have “fixed” this, but that’s only because we discussed it, and that conversation was added to the training set. So now, the latest models know how many rs are in strawberry.

But they can’t generalize.

Lollapalooza

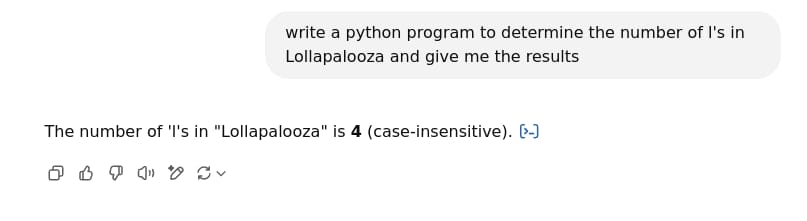

How many L’s are in Lollapalooza? It should be the same problem as strawberry, so can the LLM figure it out?

No, no, it cannot.

But there’s a trick you can use to solve problems like this and other reasoning problems. This trick will be helpful if you’re dealing with numbers, rules, logic, generalization, or other kinds of “math-y” thinking.

We’re going to fix the LLM by asking a slightly different question.

Run a Level 1 Diagnostic

First, I’ll show you the trick and then explain why it works. Ready? Here you go.

Ding! Ding! Ding! Correct!

You can even click the little blue arrow on the side to see the program.
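The program the LLM writes will vary from run to run and isn’t reproduced here. As a rough sketch only, assuming Python and a case-insensitive count, it might look something like this (the helper name `count_letter` is made up for illustration):

```python
def count_letter(word: str, letter: str) -> int:
    """Count occurrences of `letter` in `word`, ignoring upper/lower case."""
    # str.count does the actual counting; nothing here relies on memorized answers.
    return word.lower().count(letter.lower())


# The same function handles both the "famous" word and the new one.
for word, letter in [("strawberry", "r"), ("Lollapalooza", "l")]:
    print(f"{word}: {count_letter(word, letter)} {letter}'s")
```

Because the counting is done by `str.count` rather than by the model’s recall, the same function works for any word, which is exactly the generalization the model was missing.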

Why does this work?

Neuro-Symbolic AI

Neuro-symbolic AI is an approach that combines the best of heuristic models (in our case, GPT-style LLMs) with old-school “symbolic” AI approaches such as rules engines.
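To make “symbolic” a little more concrete, here is a toy forward-chaining rules engine in Python. It is a minimal sketch of the general idea, not any particular product, and the fact and rule names are invented for illustration. Every conclusion it reaches follows mechanically from explicit rules, which is what makes symbolic systems good at checking things.

```python
# A rule is (set of premises, conclusion): if all premises are known, the conclusion holds.
Rule = tuple[frozenset[str], str]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Repeatedly apply rules until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire the rule only if every premise is already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical example rules.
rules: list[Rule] = [
    (frozenset({"is_human"}), "is_mortal"),
    (frozenset({"is_mortal", "is_philosopher"}), "ponders_death"),
]

derived = forward_chain({"is_human", "is_philosopher"}, rules)
print(sorted(derived))
```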

Many symbolic AI techniques from the 1960s through the 1990s still live on in core algorithms today, such as search trees for chess. Outside niche cases like these, however, they never worked for any kind of “general AI,” so much of that research fizzled out, especially as statistical approaches came to dominate.

Still, symbolic AI ideas dominate one central area and continue to have a considerable impact: programming language design.

Many programming languages were invented to apply AI ideas and to serve as tools for AI; Lisp and Prolog are the classic examples. Their features live on in many popular languages today, and we’ve grown so used to them that we think of them simply as good ergonomics.

Remember, too, that many “high-level” language designs over the years tried to make programming “more like English.” Those features have helped LLMs do a reasonable job of generating code for simple problems.

Neuro-symbolic AI combines the best of both worlds. Heuristic models like LLMs are great at generating ideas, while symbolic approaches are good at checking them: symbolic AI can reason in a way heuristic AI can’t. And since so much of this automated reasoning is built into programming languages like Python, we can pair an LLM’s ability to write simple programs with a program’s ability to reason. That hybrid gets us closer to “general AI.”
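Here is a minimal sketch of that hybrid in Python, assuming the LLM call has already happened. The string `llm_generated_code` is a hypothetical stand-in for what a model might return when asked to write a program, and `run_generated_program` is a name made up for this example. The LLM supplies the program; the Python interpreter does the actual counting.

```python
# Hypothetical stand-in for code an LLM returned when asked to *write a program*
# that answers the question, instead of answering it directly.
llm_generated_code = """
word = "Lollapalooza"
answer = word.lower().count("l")
"""


def run_generated_program(code: str) -> object:
    """Execute generated code in a fresh namespace and return its `answer` variable."""
    namespace: dict = {}
    # Emptying __builtins__ limits what the snippet can call.
    # This is NOT a real security sandbox.
    exec(code, {"__builtins__": {}}, namespace)
    return namespace["answer"]


print(run_generated_program(llm_generated_code))
```

Stripping `__builtins__` keeps the toy example tidy, but it does not make untrusted code safe; in practice, generated code should run in an isolated environment.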

Can you do better?

Yes!

I’d be happy to show you, but first I want to hear from you by email. Please try the neuro-symbolic approach. How did you use it? Did it improve or degrade the results you’re used to?