What is Agentic AI and How Can It Help You Understand Good Product?

Beyond the Hype

“Agentic” AI is the latest in the round of hype fundraising hitting the AI circuit. Despite the name, it has little to do with the popular concept of AI “agents” running around in the background doing things for you.

It’s a new coat of paint around another concept that used to be called “tools.” Agentic AI is just an AI that can make web calls to various services. That’s it.

This can be marginally useful, and there are real use cases for it. But because of the intentional confusion around the term “agent,” AI will likely fail to live up to expectations once more, and people will turn further away from it. That would be a pity.

While tool usage is helpful, these aren’t entirely autonomous agents, despite the name. Asking why they aren’t autonomous is an interesting question. It also provides insight into a great product process.

Secret Agent Man: What’s Wrong With Agents?

The idea of AI autonomously doing something on your behalf fundamentally involves AI making decisions about what to do next, following through on those decisions, and then returning to making decisions about what to do next. It’s not unlike the OODA Loop.

This ultimately is a time series problem, similar to analyzing stock market returns.

I can take all the information and guess what TSLA might do tomorrow. Should I buy or sell? Even with all that information and the best models, there’s a lot of error. Stock picking is hard!

The problem gets compounded if I try to use the data from today to predict two days out. Now I have to forecast tomorrow and then, based on that forecast, forecast the day after.

You’ve heard the worries about AI consuming its own slop and breaking the internet. What happens when AI is trained on an internet that contains only AI-generated content? It gets markedly worse. Well, this is the same problem.

An LLM may attempt to reason heuristically through a problem, and it has a chance of being right. It may even use a tool to obtain information from the Internet or take action on your behalf. The issue is that each of these steps is only a best guess.

What the LLM does after that is now another best guess… based on a best guess.

You can hopefully see where this is going.
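
To put rough numbers on it, here is a minimal sketch. It assumes every step in a chain is right with the same probability and that steps fail independently. Both are simplifications, and the 90% figure is an illustrative assumption, not a measurement of any real model.

```python
def chain_success_probability(step_accuracy: float, steps: int) -> float:
    """Chance that every step in a chain is right.

    Assumes each step succeeds independently with the same probability.
    """
    return step_accuracy ** steps


if __name__ == "__main__":
    # 0.9 is a made-up per-step accuracy, chosen only for illustration.
    for steps in (1, 5, 10, 20):
        chance = chain_success_probability(0.9, steps)
        print(f"{steps:>2} steps: {chance:.0%} chance the whole chain is right")
```

Under these assumptions, a system that is right 90% of the time per step gets ten steps in a row right only about a third of the time.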

I saw early experiments with LLMs driving the command prompt to automate engineering. Sooner or later, the system would often convince itself to delete your hard drive, so it needed guard rails and a lot of babysitting.

There have been no breakthroughs since then. Minor incremental advances are all there are, followed by a lot of hype. Unfortunately, the same goes for most of AI.

The Man Behind The Curtain: How Humans Do It

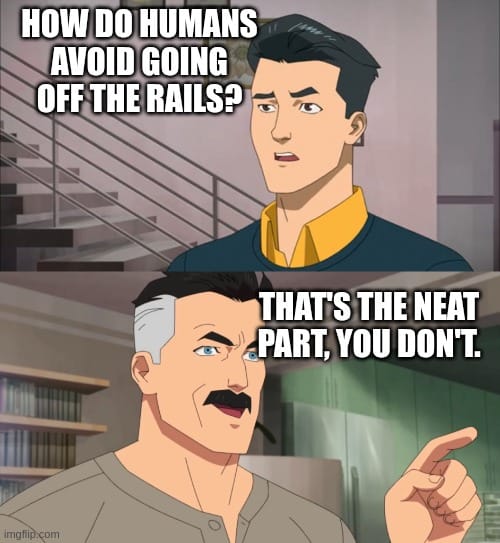

The interesting thought comes from figuring out how humans don’t succumb to this same time series problem. Why can we reason through things and get to the correct answer when LLMs go off the rails so quickly?

But humans always go off the rails! We encounter the same time series problem but manage to control it using a few methods.

Logical Reasoning

Humans can reason heuristically (System 1 in Kahneman’s Thinking, Fast and Slow) or symbolically (System 2).

Computers are, in fact, also capable of both. They just haven’t gotten very good at switching between them. LLMs are great heuristic machines, while traditional AI is good at symbolic reasoning.

Because humans can switch back and forth quickly, we can use logical reasoning to double-check our progress and ensure it’s coherent. We take our heuristic intuition and formally structure it according to some rules, then follow some deductions to determine whether it makes sense. It allows us to self-correct.

Talking to Others

Humans also check in with other humans on a reasonably frequent cadence. We ask each other, “Hey, does this make sense?” This allows us to self-correct by more or less running multiple forecasts out and averaging the results.

In software, we do this informally over Slack and formally in peer and design reviews.

In terms of products, we do this with frequent product demos with clients. The client will tell us if we’re building the right thing. Skipping frequent demos signs you up for the forecast problem: you will be making guesses based on guesses based on guesses.
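
The averaging idea can be sketched in a few lines. This is a toy simulation, not a model of real teams: it assumes each person's opinion is the true answer plus independent, unbiased noise, and the names and numbers (`true_value`, `noise`, and so on) are invented for illustration.

```python
import random
import statistics


def mean_abs_error(n_opinions, trials=2000, true_value=100.0, noise=10.0, seed=0):
    """Average error of the mean of n noisy opinions, over many trials.

    Assumes each opinion is true_value plus independent Gaussian noise.
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        opinions = [true_value + rng.gauss(0, noise) for _ in range(n_opinions)]
        errors.append(abs(statistics.fmean(opinions) - true_value))
    return statistics.fmean(errors)


if __name__ == "__main__":
    for n in (1, 3, 10):
        print(f"{n:>2} opinions: typical error {mean_abs_error(n):.2f}")
```

If the noise really is independent, the typical error shrinks as more opinions are averaged. If everyone shares the same blind spot, it does not, which is one reason the client's opinion matters more than another teammate's.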

Testing

In software, we write programs to check our code. These are called tests. They are a hybrid of logical reasoning and heuristic reasoning. However, in terms of products, ultimately, the market speaks—the market tests each product. Firms that build good products survive, and those that don’t fail. This is akin to running dozens of LLMs in parallel and only picking the answers that somehow succeed. It’s evolution, survival of the fittest.
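
Here is a minimal sketch of that generate-and-test pattern. The `propose` function is a hypothetical stand-in for one LLM attempt (it just makes a noisy guess at the square root of 144), and `passes_test` plays the role of the test suite or the market.

```python
import random


def propose(rng):
    """Hypothetical stand-in for one LLM attempt: a noisy guess at sqrt(144)."""
    return 12 + rng.choice([-2, -1, 0, 0, 1, 2])


def passes_test(candidate):
    """The 'market': an exact check that does not care how the guess was made."""
    return candidate * candidate == 144


def generate_and_test(n_attempts, seed=0):
    """Run many attempts and keep only the ones that survive the test."""
    rng = random.Random(seed)
    candidates = [propose(rng) for _ in range(n_attempts)]
    return [c for c in candidates if passes_test(c)]


if __name__ == "__main__":
    survivors = generate_and_test(50)
    print(f"{len(survivors)} of 50 attempts survived, all equal to {set(survivors)}")
```

No single attempt has to be reliable. The test does the selecting, so the quality of the result depends on the quality of the test.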

Back to Product

We’ve discussed product here a bit. But I wanted to emphasize that the problem facing agentic AI—the forecast slop problem—is one we humans face every day. And we already know the solution.

Frequent check-ins, frequent requests for feedback, and formalized systems and rubrics all help us avoid succumbing to forecast slop.
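
The effect of check-in frequency can be sketched as a toy simulation. It assumes each day of work adds a random error to how far you are from what the customer wants, and that a check-in resets that drift to zero. Both are deliberate oversimplifications, and every name and number here is made up for illustration.

```python
import random
import statistics


def worst_drift(days, checkin_every, rng, noise=1.0):
    """Largest distance from the target reached during one project."""
    drift = 0.0
    worst = 0.0
    for day in range(1, days + 1):
        drift += rng.gauss(0, noise)  # each day's work is a guess built on the last
        worst = max(worst, abs(drift))
        if checkin_every is not None and day % checkin_every == 0:
            drift = 0.0  # feedback puts us back on target (an idealization)
    return worst


def average_worst_drift(days, checkin_every, runs=200, seed=0):
    """Average the worst drift over many simulated projects."""
    rng = random.Random(seed)
    return statistics.fmean(worst_drift(days, checkin_every, rng) for _ in range(runs))


if __name__ == "__main__":
    print("no check-ins:      ", round(average_worst_drift(180, None), 1))
    print("check in every 5d: ", round(average_worst_drift(180, 5), 1))
```

In this toy model, the project that never checks in wanders much further from the target than the one that checks in every few days, even though the day-to-day error is identical.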

Hopefully, you now better understand why all those methods are required to build a good product. You’re signing up for failure if you put some genius in a room to divine what the customer wants and only check in after 6 months. Why? Because of forecast slop, that’s why. It’s the same reason you can’t just let an AI churn for 6 months and expect it to do anything but delete your hard drive.

Want to learn more about agentic AI or good product processes? Email me at [email protected] for a free Zoom call.